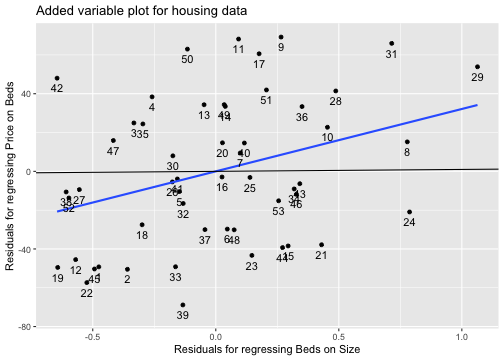

class: center, middle, inverse, title-slide .title[ # MLR Comparing Models and Identifying Interesting Data Points ] .subtitle[ ## Stat 21 ] .author[ ### Suzanne Thornton ] .institute[ ### Swarthmore College ] --- <style type="text/css"> pre{ background: #FFBB33; max-width: 100%; overflow-x: scroll; } .scroll-output{ height: 70%; overflow-y: scroll; } .scroll-small{ height: 30%; overflow-y: scroll; } .red{color: #ce151e;} .green{color: #26b421;} .blue{color: #426EF0;} ## Why isn't this running???? </style> # Decisions about predictors ## Nested F test Nested F-tests can test whether or not a group of predictor *terms* have zero coefficients in a multiple regression model. This is particularly useful when assessing whether or not to include a categorical predictor or higher order polynormial terms. Consider the `CrabShip` data which has a categorical predictor `Noise`. ```r data(CrabShip) CrabShip %>% head ``` ``` ## Mass Oxygen Noise ## 1 22.7 89.2 ambient ## 2 34.6 141.1 ambient ## 3 36.0 140.1 ambient ## 4 40.1 204.9 ambient ## 5 47.5 129.1 ambient ## 6 49.6 154.6 ambient ``` --- # Decisions about predictors ## Nested F test If we want to test whether or not `Noise` is a significantly non-zero predictor for `Oxygen`, we can consider the model with and without this predictor. Note that the null hypothesis in the `anova` test below is that `\beta_j=0` for every `j=1,...,k-1` where `k=` the number of levels for the categorical predictor `Noise`. ```r full <- lm(Oxygen ~ Mass + Noise, CrabShip) red <- lm(Oxygen ~ Mass, CrabShip) anova(red, full) ``` ``` ## Analysis of Variance Table ## ## Model 1: Oxygen ~ Mass ## Model 2: Oxygen ~ Mass + Noise ## Res.Df RSS Df Sum of Sq F Pr(>F) ## 1 32 89954 ## 2 31 42516 1 47438 34.589 1.721e-06 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ``` --- # Decisions about predictors ## Compare adjusted coefficient of determination We can also make decisions about whether or not to include certain predictor terms or variables by assessing the adjusted `\(R^2\)` values for each model under consideration. Such comparison is a statistical estimation problem, not an inferential problem. ```r summary(full)$adj.r.squared ``` ``` ## [1] 0.6038188 ``` ```r summary(red)$adj.r.squared ``` ``` ## [1] 0.1879705 ``` --- # Decisions about predictors ## Mallow's Cp Another useful summary statistic to compare models is Mallow's `\(C_p\)`. This is a comparative summary statistic when assessing a model with `\(m\)` predictors compared to a larger model with `\(k\)` predictors ($1<m<k$). **Just like** in the nested F-test, the models being compared **must** be nested. A smaller value of this summary statistic is preferable as it is a relative measure of terms involving the sum squares of the models' residuals. ```r library(olsrr) ols_mallows_cp(red, full) ``` ``` ## [1] 35.58853 ``` --- # Identifying unsusal data points ## Added varb plots Added variable plots are useful when we want to understand relative effects of a particular predictor variable, given that all other predictors have been accounted for in the model. In particular, for MLR models this is a useful technique to identify potentially influential data points that are unusual only if we consider the values of another/other predictor variable(s). ```r data(HousesNY) HousesNY %>% head ``` ``` ## Price Beds Baths Size Lot ## 1 57.6 3 2 0.960 1.30 ## 2 120.0 6 2 2.786 0.23 ## 3 150.0 4 2 1.704 0.27 ## 4 143.0 3 2 1.200 0.80 ## 5 92.5 3 1 1.329 0.42 ## 6 50.0 2 1 0.974 0.34 ``` --- # Identifying unsusal data points ## Added varb plots Suppose the predictor of interest is `Size`. The larger model includes `Beds`, `Lot`, and `Size`. .scroll-small[ ```r mod0 <- lm(Price ~ Beds + Lot + Size, HousesNY) mod0 %>% summary ``` ``` ## ## Call: ## lm(formula = Price ~ Beds + Lot + Size, data = HousesNY) ## ## Residuals: ## Min 1Q Median 3Q Max ## -64.549 -28.878 0.228 29.280 68.743 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 37.899 24.397 1.553 0.1268 ## Beds 5.022 9.567 0.525 0.6020 ## Lot 5.934 6.783 0.875 0.3859 ## Size 32.142 12.696 2.532 0.0146 * ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 36.3 on 49 degrees of freedom ## Multiple R-squared: 0.2766, Adjusted R-squared: 0.2324 ## F-statistic: 6.247 on 3 and 49 DF, p-value: 0.001121 ``` ] --- # Identifying unsusal data points ## Added varb plots The smaller model includes only `Beds` and `Lot`. ```r mod1 <- lm(Price ~ Beds + Lot, HousesNY) mod1 %>% summary ``` ``` ## ## Call: ## lm(formula = Price ~ Beds + Lot, data = HousesNY) ## ## Residuals: ## Min 1Q Median 3Q Max ## -68.850 -30.035 -5.439 33.385 69.208 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 32.652 25.590 1.276 0.20787 ## Beds 22.803 6.838 3.335 0.00162 ** ## Lot 4.429 7.113 0.623 0.53634 ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 38.21 on 50 degrees of freedom ## Multiple R-squared: 0.182, Adjusted R-squared: 0.1493 ## F-statistic: 5.563 on 2 and 50 DF, p-value: 0.006586 ``` --- # Identifying unsusal data points ## Added varb plots Added variable plots would consider the effect of the predictors from the smaller model `Beds` and `Lot` in relation to the predictor of interest, `Size`. ```r mod2 <- lm(Size ~ Beds + Lot, HousesNY) mod2 %>% summary ``` ``` ## ## Call: ## lm(formula = Size ~ Beds + Lot, data = HousesNY) ## ## Residuals: ## Min 1Q Median 3Q Max ## -0.64501 -0.29638 0.02711 0.26535 1.06363 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) -0.16325 0.27079 -0.603 0.549 ## Beds 0.55318 0.07236 7.645 5.92e-10 *** ## Lot -0.04683 0.07527 -0.622 0.537 ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 0.4044 on 50 degrees of freedom ## Multiple R-squared: 0.5601, Adjusted R-squared: 0.5425 ## F-statistic: 31.83 on 2 and 50 DF, p-value: 1.211e-09 ``` --- # Identifying unsusal data points ## Added varb plots Let's collect the residuals from the smaller model and the residuals from the regression of the predictor of interest (`Size`) on the predictors of the smaller model in a new data object. ```r added_varb_plot_data <- tibble(res1 = mod1$residuals, res2 = mod2$residuals) added_varb_plot_data %>% head ``` ``` ## # A tibble: 6 × 2 ## res1 res2 ## <dbl> <dbl> ## 1 -49.2 -0.475 ## 2 -50.5 -0.359 ## 3 24.9 -0.333 ## 4 38.4 -0.259 ## 5 -10.4 -0.148 ## 6 -29.8 0.0468 ``` --- # Identifying unsusal data points ## Added varb plots Now we'll create an added variable plot by plotting these two groups of residuals against one another. We want to look out for any strange data points to investigate them as potentially influential data points. ```r ggplot(added_varb_plot_data, aes(x=res2, y=res1)) + geom_point() + geom_smooth(method=lm, se=FALSE) + geom_abline(intercept=0) + geom_text(label=rownames(added_varb_plot_data), nudge_y = -5) + labs(title="Added variable plot for housing data", x="Residuals for regressing Beds on Size",y="Residuals for regressing Price on Beds") ``` --- # Identifying unsusal data points ## Added varb plots <!-- --> --- # Identifying unsusal data points ## Added varb plots Notice that the slope of the line of best fit for the added variable plot is actually the coefficient of the predictor `Size` in the larger model containing all three predictors. ```r resids_mod <- lm(res1 ~ res2, added_varb_plot_data) resids_mod %>% summary() ``` ``` ## ## Call: ## lm(formula = res1 ~ res2, data = added_varb_plot_data) ## ## Residuals: ## Min 1Q Median 3Q Max ## -64.549 -28.878 0.228 29.280 68.743 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 4.153e-15 4.887e+00 0.000 1.0000 ## res2 3.214e+01 1.244e+01 2.583 0.0127 * ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 35.58 on 51 degrees of freedom ## Multiple R-squared: 0.1157, Adjusted R-squared: 0.09834 ## F-statistic: 6.672 on 1 and 51 DF, p-value: 0.01271 ``` --- # Identifying unsusal data points ## Leverage `$$Leverage_i = h_i = \frac{1}{n} + \frac{x_i - \bar{x}}{\sum (x_i - \bar{x})^2}$$` In R, we use the function `hatvalues()` to calculate the leverage for each data point. For example, in `mod0` with the housing data: ```r mod0 %>% hatvalues ## Note that the input is the model, not the data ``` ``` ## 1 2 3 4 5 6 7 ## 0.05746567 0.24215674 0.04928657 0.03208284 0.03363332 0.09784971 0.10144826 ## 8 9 10 11 12 13 14 ## 0.11165497 0.04227226 0.06046648 0.03086568 0.06384987 0.02505667 0.03040575 ## 15 16 17 18 19 20 21 ## 0.05021722 0.06730199 0.04452998 0.08379383 0.09010030 0.02873509 0.05678601 ## 22 23 24 25 26 27 28 ## 0.07083918 0.10057508 0.22117033 0.02912529 0.08259537 0.08243321 0.06281797 ## 29 30 31 32 33 34 35 ## 0.17384227 0.04289596 0.11560486 0.26386171 0.04064779 0.06285799 0.04447776 ## 36 37 38 39 40 41 42 ## 0.12277182 0.03894932 0.06969216 0.03600822 0.04238490 0.11157827 0.08224534 ## 43 44 45 46 47 48 49 ## 0.04819574 0.04266616 0.06344810 0.03759056 0.05666408 0.11541469 0.03121585 ## 50 51 52 53 ## 0.07859199 0.10368117 0.07984430 0.04535338 ``` --- # Identifying unsusal data points ## Leverage Leverage is actually used in the calulations of the standardized and studentized residuals. * Standardized residuals: `\(\frac{y_i - \bar{y}}{\hat{\sigma}\sqrt{1 - h_i}}\)` We can calculate the standardized residuals using the `rstandard()` function in R. * Studentized residuals: `\(\frac{y_i - \bar{y}}{\hat{\sigma}_{(i)}\sqrt{1 - h_i}}\)`, where `\(\hat{\sigma}_{(i)}\)` is the estimate from the model that doesn't include the `\(i^{th}\)` data point. We can calculate the studentized residuals using the `rstudent()` function in R. .footnote[Note, the input of these functions in R should be the *model* not the data.] --- # Identifying unsusal data points ## Leverage Other measures of influence include * Cook's distance can be calulated using the function `cooks.distance()` in R (where again, the input is the model, not the data). ```r mod0 %>% cooks.distance ``` ``` ## 1 2 3 4 5 6 ## 1.413538e-02 1.213439e-01 1.314107e-02 1.418003e-02 2.201015e-04 2.230206e-02 ## 7 8 9 10 11 12 ## 9.345626e-04 2.584234e-03 3.219548e-02 8.582777e-04 2.651157e-02 1.021610e-02 ## 13 14 15 16 17 18 ## 6.434225e-03 6.377695e-03 2.423212e-02 2.046011e-04 2.793853e-02 6.072641e-03 ## 19 20 21 22 23 24 ## 1.721907e-02 1.109198e-03 3.230200e-02 2.552244e-02 5.439213e-02 1.479885e-01 ## 25 26 27 28 29 30 ## 3.379619e-04 9.693781e-07 1.314690e-03 9.005140e-03 1.877188e-02 1.647495e-03 ## 31 32 33 34 35 36 ## 5.181849e-02 1.399116e-02 1.615643e-02 5.060605e-03 1.065972e-02 1.480775e-02 ## 37 38 39 40 41 42 ## 6.554121e-03 1.216308e-03 3.063237e-02 1.036617e-03 3.217909e-05 8.755088e-02 ## 43 44 45 46 47 48 ## 3.036361e-03 2.036300e-02 1.630211e-02 3.840482e-03 1.035753e-02 2.962539e-02 ## 49 50 51 52 53 ## 6.984666e-03 7.812609e-02 3.058865e-02 5.320046e-04 5.160044e-03 ```