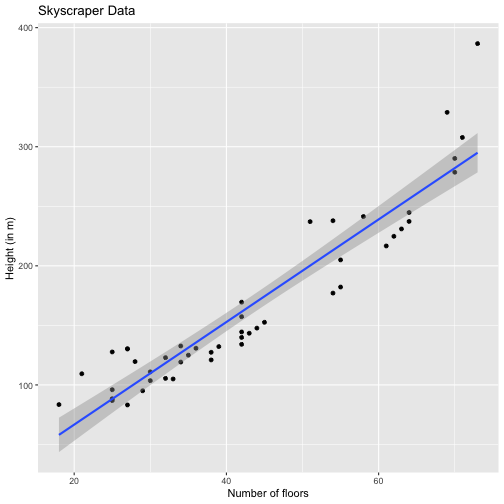

class: center, middle, inverse, title-slide .title[ # Simple linear regression worksheet ] .subtitle[ ## Statistical Methods II (Stat 021) ] .author[ ### Suzanne Thornton ] .institute[ ### Swarthmore College ] .date[ ### (updated: 2023-07-31) ] --- <style type="text/css"> pre { background: #FFBB33; max-width: 100%; overflow-x: scroll; } .scroll-output { height: 60%; overflow-y: scroll; } .scroll-small { height: 30%; overflow-y: scroll; } .red{color: #ce151e;} .green{color: #26b421;} .blue{color: #426EF0;} </style> ## Group work ### Part I - Building and interpreting a SLR model The following data set was collected in 2018-2019 and recorded different attributes of skyscrapers in NYC. We are going to investigate how the height (in meters) `\((Y)\)` of a skyscraper depends on the number of stories (i.e. floors) it has `\((x)\)`. ```r skyscrapers <- read_csv("~/GitHub/Stat21/Data/skyscraper_data.csv") # url("http://www.swarthmore.edu/NatSci/sthornt1/DataFiles/skyscraper_data.csv")) head(skyscrapers) ``` ``` ## # A tibble: 6 × 8 ## ID Building_name height_met…¹ heigh…² floors year mater…³ purpose ## <dbl> <chr> <dbl> <dbl> <dbl> <dbl> <chr> <chr> ## 1 1 30 Hudson Yards 387. 1268 73 2019 concre… office ## 2 2 3 World Trade Center 329. 1079 69 2018 compos… office ## 3 3 35 Hudson Yards 308. 1010 71 2019 concre… reside… ## 4 4 220 Central Park South 290. 952 70 2019 concre… reside… ## 5 5 15 Hudson Yards 279. 914 70 2019 concre… reside… ## 6 6 The Centrale 245. 803 64 2019 concre… reside… ## # … with abbreviated variable names ¹height_meters, ²height_ft, ³material ``` --- ## Group work ### Part I - Building and interpreting a SLR model .scroll-output[ The following code creates a SLR model for this problem and names the model `SLR_sky`. ```r SLR_sky <- lm(height_meters ~ floors, skyscrapers) SLR_sky ``` ``` ## ## Call: ## lm(formula = height_meters ~ floors, data = skyscrapers) ## ## Coefficients: ## (Intercept) floors ## -19.488 4.308 ``` This model contains a lot of different information that is not obvious from the output above: ```r names(SLR_sky) ``` ``` ## [1] "coefficients" "residuals" "effects" "rank" ## [5] "fitted.values" "assign" "qr" "df.residual" ## [9] "xlevels" "call" "terms" "model" ``` With your group, interpret the output of `summary(SLR_sky)`. Answer the following questions and submit your responses to me on Slack. **Question 1:** (a) Write and interpret the estimated regression equation. (b) Find a `\(95\%\)` CI for the estimated effect of the number of floors on the height of the building. (Hint: Use the function `confint(SLR_sky, 'floors', level=0.95)`) (c) Identify the estimate of the variance of the random error. **Question 2:** Which assumptions are required to answer each of the questions above? **Question 3:** How can you evaluate the independence assumption? ] --- ## Group work ### Part I - Solutions .scroll-output[ **Question 1:** (a) Write and interpret the estimated regression equation. .blue[The estimated regression equation is:] `\(\hat{y} =E(Y \mid x) = -19.49 + 4.32x\)`.blue[, where ] `\(x=\text{the number of floors}\)` and `\(y=\text{the height in meters}\)`.blue[. The intercept is not necessarily directly interpretable although one possible way to interpret it in this case is perhaps this is the height of the ground floor or basement of a building. The slope on the other hand is directly interpretable. The slope tells us that for each additional floor a building has, we expect the **average height** of the building overall to increase by `\(4.3\)` meters. The word average is crucial here!] (b) Find a `\(95%\)` CI for the estimated effect of the number of floors on the height of the building. (Hint: Use the function `confint(SLR_sky, 'floors', level=0.95)`) .blue[The code above produces a `\(95%\)` confidence interval from `\(3.82\)` to `\(4.80\)`. That is, we are `\(95%\)` confidence that the true value of] `\(\beta_1\)` .blue[-the true average change in height of the building for each additional floor - is between `\(3.82\)` meters and `\(4.3\)` meters.] (c) Identify the estimate of the variance of the random error. .blue[The summary of the regression model displays the *residual standard error*, or] `\(\hat{\sigma}=25.8\)`.blue[. Therefore the estimated variance of the random error is] `\(\hat{\sigma}^2 = 25.8^2\)`. ] --- ## Group work ### Part I - Solutions .scroll-output[ **Question 2:** Which assumptions are required to answer each of the questions above? .blue[Part (a) and (c) of Question 1 are estimation questions for which we only need to assume the errors have zero mean, constant variance/spread (we'll talk more about how to check this later), and are independent. Part (b) however is a question of inference and so we must also check the assumption that the errors are Normally distributed.] **Question 3:** How can you evaluate the independence assumption? .blue[Our random error in this model is anything that is keeping the number of floors a building has from being an exact predictor of the height of the building overall. Perhaps some floors are higher than others or the overall building height may include the height of components such as rafters or insulation. We want to consider whether or not the error associated in measuring one building is independent of the error associated in another building. Depending on how the data is collected this may or may not be the case. If we randomly sample buildings from a particular region, then the measurement errors are probably unrelated. However if we sample buildings based off of a convenience sample for example, it's possible that their heights are related based on other common factors such as the age the of buildings and construction codes of the time.] ] --- ## Group work ### Part II - Interpreting plots for SLR models .scroll-output[ The following code creates a scatter plot of the data and fits the linear regression line over it (as well as a confidence bound on the regression line). ```r ggplot(skyscrapers, aes(x=floors ,y=height_meters)) + geom_point() + geom_smooth(method='lm') + labs(title="Skyscraper Data", x="Number of floors", y="Height (in m)") ``` <!-- --> ] --- ## Group work ### Part II - Interpreting plots for SLR models .scroll-output[ You can extract the residuals and fitted values from your model with the following code: ```r SLR_sky$residuals SLR_sky$fitted.values ``` Recall you can use the `mutate()` function to add new columns to an existing data object (tibble). **Question 1:** What is the correlation between the variables `floors` and `height_meters`? (Hint: Use the `cor()` function in R.) **Question 2:** What is the coefficient of determination? **Question 3:** Use the following code to create a residual plot of the residuals vs the fitted values and interpret. ```r ggplot(?, aes(x=?, y=?)) + geom_point() + labs(title="Residuals vs fitted values", x="Fitted values", y="Residuals") + geom_hline(yintercept=0) ``` **Question 4:** Use the following code to create a normal probability plot of the standardized residuals and interpret. ```r ggplot(?, aes(sample=?)) + stat_qq() + stat_qq_line() + labs(title="Normal prob plot for the standardized residuals") ``` ] --- ## Group work ### Part II - Solutions .scroll-output[ You can extract the residuals and fitted values from your model with the following code: ```r SLR_sky$residuals SLR_sky$fitted.values ``` Recall you can use the `mutate()` function to add new columns to an existing data object (tibble). **Question 1:** What is the correlation between the variables `floors` and `height_meters`? (Hint: Use the `cor()` function in R.) .blue[Using the R code `cor(skyscrapers$floors, skyscrapers$height_meters)` we find a correlation of 0.94. Correlation can take on values between -1 and 1, values near zero indicate a weak linear association whereas values near the endpoints indicate a strong linear association.] **Question 2:** What is the coefficient of determination? .blue[The coefficient of determination, or R-squared value, is found in the model summary. We are going to use the adjusted R-squared value (more on why later) which is 0.87. Values close to one indicate a strong linear relationship and values close to zero indicate a weak linear relationship. In SLR, the coefficient of determination is just the correlation between the two variables, squared!] ] --- ## Group work ### Part II - Solutions .scroll-output[ To create these plots, it's useful to create a second data object that contains the standardized residuals and the fitted values: ```r skyscrapers2 <- skyscrapers %>% mutate(residuals_std = scale(SLR_sky$residuals), fitted_vals = SLR_sky$fitted.values) ``` **Question 3:** Use the following code to create a residual plot of the residuals vs the fitted values and interpret. ```r ggplot(skyscrapers2, aes(x=fitted_vals, y=residuals_std)) + geom_point() + labs(title="Residuals vs fitted values", x="Fitted values", y="Residuals") + geom_hline(yintercept=0) ``` .blue[Interpretation: We're looking for evidence of a non-constant spread in the residuals, i.e. some type of funneling behavior. We do see some pretty strong evidence of a change in the dispersion of the residuals in this plot. Notice how at the fitted y-values of 50 or 100 or 150 the spread of the residuals is about 1 or two units but when we get to larger fitted values (around 280 and 300), the residuals are spread out by about 3 units. This shows that as the fitted values increase - or for larger predicted heights - there is more variability in the discrepancy between the actual building height and the predicted height.] ] --- ## Group work ### Part II - Solutions **Question 4:** Use the following code to create a normal probability plot of the standardized residuals and interpret. ```r ggplot(skyscrapers2, aes(sample=residuals_std)) + stat_qq() + stat_qq_line() + labs(title="Normal prob plot for the standardized residuals") ``` .blue[Interpretation: We're checking the assumption that the errors are normally distributed by determining if the residuals look Normally distributed. There is some strong deviation from the diagonal line that indicates a Normal distribution, especially for larger values of our standardized residuals. We see some indication here that the largest values of our residuals are even bigger than we would expect if the random error was actually Normally distributed. Thus we have slightly higher upper tail values than we would under Normality.]